The Attallah College maintains a Quality Assurance System (QAS) to inform continuous improvement based on data and evidence that are collected, maintained, and shared. Data inform practices and procedures and provide the basis for inquiry, additional data collection, program revisions, and new initiatives. Data and evidence are used to improve programs, to strengthen consistency across programs, and to measure the impact of programs and of completers on P-12 student learning and development.

Informing Continuous Improvement. The Attallah College at Chapman University is under continuous review at several levels. First, the College is developing its use of data-driven decision making. Beginning in 2016, the College instituted program Annual Reports (discussed in Standards 1.2, 5.3, and 5.4), which give programs the opportunity to review their program-specific data and compare data from one assessment cycle (term) to the next to identify trends, challenges, and areas for improvement. These reports, alongside the University-required Annual Learning Outcomes Assessment Reports (ALOAR), serve as the analysis portion of the comprehensive quality assurance system. Taken together, these two pillars serve as the foundation for continuous improvement and quality assurance in the Attallah College.

Data and Evidence: Collected, Maintained, and Shared. Data are collected, monitored, stored, reported, and used by stakeholders both within and outside the College. The Office of Program Assessment and Improvement collects evidence and shares it with all stakeholders. The ongoing collection of evidence in response to the California standards, and its alignment with the CAEP standards through the self-study process, has illuminated many strengths of our quality assurance system. Data collected and supplied to programs for these reports are based on three factors. First, we base data collection on program-specific needs; for example, we redesigned our End of Semester Surveys to gain a clearer understanding of students’ perceptions of course utility versus access to resources (see End of Semester Survey). Second, we base our data collection on state and federal requirements; for example, to ensure that candidates gain fieldwork experience in diverse classrooms (initial completers) and schools (advanced completers) (see 2.1 TE Appropriate Placement Table 2017-18 and A2.1 Counseling Placements MOUs). As described in Standard 5.1, these data provide the foundation for our program-level and college-wide analysis.

Informing Practice. Through this process, we have been able to identify where programs are using data well for continuous improvement and innovation, as well as areas that need improvement. The conjoined State of California and CAEP self-study process, for example, exposed areas where more or better data and evidence are needed. Inconsistencies across programs also became apparent. Finally, the process showed that we need to further improve the reliability and validity of our instruments, as well as transparency and publicity regarding what data are available.

5.1 Quality and Strategic Evaluation. The provider's quality assurance system is comprised of multiple measures that can monitor candidate progress, completer achievements, and provider operational effectiveness. Evidence demonstrates that the provider satisfies all CAEP Standards.

The Attallah College QAS has been evolving through a process of systematic and reflective thinking focused on continuous improvement. In 2011, the Attallah College developed and first implemented a comprehensive evaluation system called the Program Improvement System for the College of Education Studies (PISCES). PISCES was intended to gather multiple types of data from multiple sources so that faculty members could better understand how students experience Attallah College programs, make decisions that improve programs, and ensure that students meet the highest standards of professional preparation. It was designed to compile and report data on all program improvement targets and claims for all areas of review on an annual basis. The data were compiled across the Unit by area of review and disaggregated by program area within each area of review.

In 2015, the faculty decided to re-align data types and collection strategies to more appropriately respond to different and changing program-specific accreditation standards. Many of the data types remained the same as those identified in PISCES, but each program designed the data items to address program-specific standards.

In fall 2016, we began implementing the QAS that we currently use, which is aimed at informing continuous improvement. The system is evidence-based and comprises multiple measures that monitor candidate progression through programs, completer satisfaction and achievements, and overall College effectiveness at multiple points. The Attallah College Office of Program Assessment and Improvement collects data from multiple sources and stores, organizes, and supplies those data to programs across six assessment areas that are aligned with the CAEP standards:

- Admission

- Student Progress and Support

- Student Performance

- Clinical Experiences

- Graduate Outcomes

- Program Review and Improvement

This QAS was developed to provide a process for continuous review and assessment of candidates, faculty, programs, and Unit operations. The annual review process involves needs assessment, improvement planning, collecting and reviewing formative data, assessing annual objectives, and formally aggregating, disaggregating, and analyzing data for the next cycle. Candidate assessment data are collected and reviewed regularly to ensure appropriate progress in meeting college proficiencies, state competencies, and national standards. This process includes the review of data from entrance requirements, course-based assessments described as critical tasks, field experiences, culminating experiences, exit requirements, and alumni follow-up reports. Faculty and administration also use candidate data to assess how effectively programs, teaching, and Unit operations prepare candidates. Thus, faculty and administration develop improvement plans based in large part on candidate data.

Table 1 summarizes the evidence we collect, the data collection methods, and the reporting schedule for each area of review.

For each area of review, we have articulated a series of questions that drive our use of data and evidence; these questions are summarized in Table 2.

5.2. The provider’s quality assurance system relies on relevant, verifiable, representative, cumulative and actionable measures, and produces empirical evidence that interpretations of data are valid and consistent.

Data are collected, monitored, stored, reported, and used by stakeholders both within and outside the College. Collecting evidence in response to the State of California standards, and reflecting on it through the CAEP self-study process, has illuminated many strengths of our quality assurance system, showing where programs are using data well for continuous improvement and innovation, as well as areas that need improvement.

Through this self-study, for example, we identified areas where more or better data and evidence are needed. Some inconsistencies across programs, as well as a need to improve instrument reliability and validity, also became apparent. Additionally, we have identified a need to improve transparency and publicity regarding what data are available and how data are shared.

The QAS is under continuous review at several levels. The College maintains the QAS to inform continuous improvement based on data and evidence that are collected, maintained, and shared. Data inform practices and procedures and provide the basis for inquiry, additional data collection, program revisions, and new initiatives. Data and evidence are used to improve programs, to strengthen consistency across programs, and to measure the impact of programs and of completers on P-12 student learning and development.

Relevant and Verifiable. We find the data shared, analyzed, and referenced throughout Standards 1-4 to be relevant: our instruments are designed to meet the needs of our programs and the mandates of the State of California, the Department of Education, and CAEP while minimizing the burden of data collection on students, graduates, staff, and faculty. Over the past two years (2016-2018), we have worked to develop shared (cross-programmatic) objectives for data collection (see Tables 5.1 and 5.2). Once we set these objectives, we began to systematize instruments across programs; these are the instruments we have used over the two-year timeframe (see Tables 5.1 and 5.3). We are only now beginning to settle on standardized data collection timelines, instruments, and methods. In addition to college-wide data, we co-create program-specific instruments (A1.2 & 1.2 Program Annual templates). As a result, we have not yet undergone systematic verifiability reviews. In fall 2019, we intend to pilot test our instruments with appropriate subject-matter participants, review the pilot findings to make necessary improvements to the instruments, and re-pilot the improved instruments if necessary.

We will not pilot these instruments before fall 2019 because the State of California set new standards for Single Subject and Multiple Subject Credentials that went into effect July 1, 2018 (affecting our incoming August 2018 cohort). As a result, many instruments will be updated to reflect these standards. In addition, in fall 2018 the State of California released updated Education Specialist standards that will go into effect by summer and fall 2021; these require us to redesign the program so that our candidates meet the updated standards, which in turn requires a redesign of our data collection instruments. Finally, because our University and College focus on small classes that offer students a personalized education, our populations are small, and we will often lack sample sizes large enough to yield statistically significant findings.

Representative. As mentioned above, our numbers of enrolled students, candidates, and graduates are small, so we strive to collect data on all participants in all categories. Our data can lack representativeness when disaggregated by subcategory, where a category may contain only one student; such data cannot support statistically meaningful conclusions on their own, yet we still consider them as part of our triangulation of data. We are currently delineating, in all of our data results, what each sample does and does not represent, to promote clarity of analysis and program improvement.

Cumulative. As mentioned above, the College has actively focused on systematic data collection since 2016. As a result, we are able to share three cycles of data[1] on the following data collection instruments:

- End of Semester Student Feedback Survey (All Programs, modified each year)

- Exit Survey (All Programs)

Counseling Students

- Fieldwork Supervisor Feedback Survey

Teacher Education Students (standardized in 2016; to be updated in 2019, and again in 2021 for Special Education)

- University Supervisor Feedback Survey

- Master Teacher Feedback Survey

- Competency Survey

All Faculty (modified in 2017)

- Full-time Faculty Reflection on Course Evaluation & Teaching

- Part-time Faculty Reflection on Course Evaluation & Teaching

Teacher Education Faculty

- Professional Disposition Evaluation*

- Student Check Survey

- Faculty Participation in Public Schools Survey*

University Supervisors

- Student Teaching Interim Evaluations

- Student Teaching Formative Evaluations

- Student Teaching Summative Evaluation

- Professional Disposition Evaluation*

- University Supervisor Evaluation of Mentor Teacher*

Master Teachers

- Master Teacher Information Survey

- Student Teaching Observation & Evaluation Report

Alumni

- Graduate Outcome Survey[2]

Figure 5.3. 2016-2018 Attallah College Data Collection Instruments

Actionable & Consistent. All college-wide data are provided to faculty and administrators in an accessible form that guides programs through evaluation and modification. These actionable data are provided along with tools (see ALOAR and Annual Report Templates) designed to promote program faculty discussion and program improvement. Further, the Dean, Associate Deans, and the Director of Program Assessment and Improvement review all annual data and discuss areas where evidence indicates a need for college-wide improvement. In addition, in the monthly Program Coordinators meetings, each program coordinator provides verbal feedback on the usefulness and focus of college-wide data collection methods and outcomes. For example, at a monthly program coordinators’ meeting in 2018, faculty and coordinators expressed frustration to the Director of Program Assessment and Improvement that the program improvement office had administered the end-of-semester student feedback survey too late in the semester to garner a sufficient number of student responses. As a result, the Office of Program Improvement shifted the end-of-semester survey timeline, administering the survey a week earlier and improving college-wide response rates. Once we have collected data for multiple terms, we will assess the response rate under the new timeline and share these data with the programs. These meeting conversations serve as additional guidance on areas for improvement in data collection and presentation for all programs.

As mentioned in previous sub-standards, we are currently updating our teacher education programs because the State of California has updated its program standards, and we plan to update our quality assurance measures as well. Our goal is to use tools that are compatible with the California teacher education context and that have been demonstrated to be both reliable and valid. In the absence of such measures, we will continue to develop our own tools, with increasing focus on reliability and validity, in this fluid period for teacher preparation in our state.

5.3+ Continuous Improvement. The provider regularly and systematically assesses performance against its goals and relevant standards, tracks results over time, tests innovations and the effects of selection criteria on subsequent progress and completion, and uses results to improve program elements and processes.

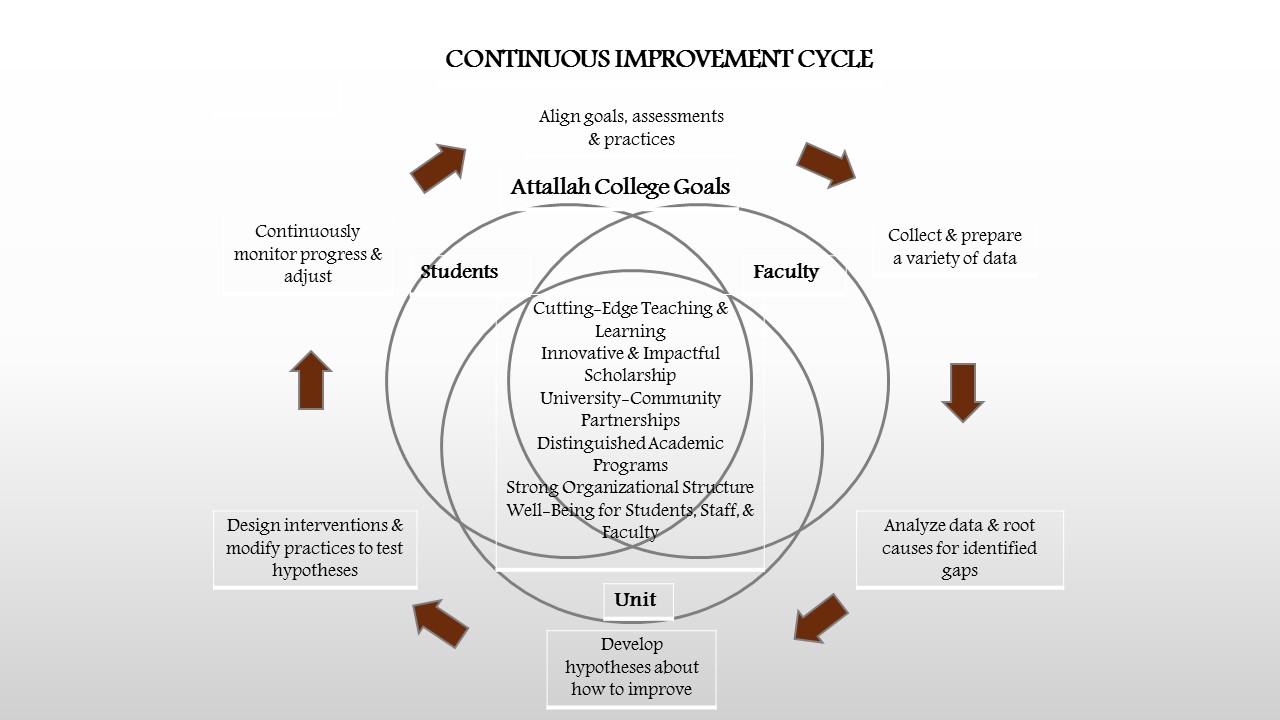

Figure 1. Attallah College Continuous Improvement Cycle

As illustrated in Figure 5.1, the Attallah College has adopted a continuous improvement cycle founded on our goals. Within this cycle, each member of the College plays a specific and important role in contributing to improvement. The focus of the Program Improvement and Assessment team is to guide, collect, clean, prepare, and share data, and to support the phases along the ring of the figure. Each program faculty member contributes to the improvement cycle within their unit by setting targets and planning, implementing what is planned, and reviewing and analyzing data to evaluate outcomes. Students participate by providing ongoing feedback on application, admission, orientation, coursework, support services, fieldwork, and their overall program experience. Students further support the continuous improvement cycle by serving as indicators of program design and execution, as we monitor program inputs (applicant number, quality, and inclusiveness) and outcomes (program contribution to career and PK-12 student success, placements, and retention) to guide the inputs and outputs of the programs. Next, faculty leaders contribute by sharing data-based best practices within and across programs, which are then put into action. Once program improvements are put into action, we return to our plan, do, study, act, evaluate cycle.

As discussed in Standard 5.2, the College has developed a consistent and routine continuous improvement cycle through our program annual reports (Standards A1.2 and 1.2). These reports were initially developed as standardized instruments but have evolved over the past two years (2016-2018) to reflect program-specific goals. In 2016, we began collecting baseline data across the College (see Figure 5.2 above). Over these two years, we have realized that, although the College will continue to collect the standardized evidence referenced in Figures 5.1, 5.2, and 5.3, we may need to collect different or additional data based on program assessments and needs. As a result, moving forward we hope to customize each program’s annual report to reflect program-specific goals over time. For example, as the initial programs undergo updates based on the new state teaching standards, we will discuss what evidence is currently collected and what evidence each program would like to collect so that it can make data-informed decisions moving forward.

5.4+ Continuous Improvement. Measures of completer impact, including available outcome data on P-12 student growth, are summarized, externally benchmarked, analyzed, shared widely, and acted upon in decision-making related to programs, resource allocation, and future direction.

To demonstrate completer impact on P-12 student growth, the College is developing studies that focus on multiple measures (as outlined in the study plan below). Current research indicates that multiple measures are necessary to demonstrate teacher effectiveness on student growth (Chaplin, Gill, Thompkins, & Miller, 2014; Corcoran, 2010; Mangiante, 2011). There is a lack of evidence to support using a single measure, such as standardized test scores, to demonstrate the value-added (VA) of individual teachers on students' long-term outcomes (Baker et al., 2010; Bill & Melinda Gates Foundation, 2010; Corcoran, 2010; Hill et al., 2011). Further, studies have shown that a student attending a school in a high-poverty area is more likely to score below proficient, and as a result must make larger gains to demonstrate growth, than peers who attend schools in low-poverty areas (Barnum, 2017). Accordingly, California has developed a data Dashboard that currently focuses on assessing district- and school-level effectiveness using multiple measures.

The California Dashboard shows progress, or lack of it, on multiple measures. The Dashboard reports achievement on six measures, using color codes selected by the state: career and college readiness, chronic absenteeism, suspension, English Language Arts, Math, and high school graduation rates. According to the California Department of Education, the Dashboard contains reports that display the performance of local districts, schools, and student groups on a set of state and local measures to assist in identifying strengths, challenges, and areas in need of improvement. The state does not, however, provide teacher-level data either to the public or to institutions of higher education. Because the Dashboard was only completed on December 6, 2018, the College has not yet had time to review how these data might support the continuous improvement process for our teacher preparation programs.

Study Plan. At this time, the Attallah College has developed a study plan to demonstrate completer impact on P-12 student growth. Research Questions:

- To what extent are Attallah College (MAT, MACI, SPed) program completers contributing to expected levels of student-learning growth?

- In what ways does Attallah College’s teacher preparation program contribute to student-learning growth?

- In what ways can Attallah College’s teacher preparation program improve program execution to support stronger student-learning outcomes?

Goal: To identify Attallah College teacher education strengths and weaknesses, and points where program changes might be made.

Methods: We intend to collect data on impact on P-12 learning and development through two separate methods. First, we propose to select a sample[1] of Attallah graduates who would be willing to participate as case study sites. Second, we will request program completer volunteers who may be willing to conduct an action research project during their first and/or second year of teaching. For both methods, we will request participation during teacher candidates’ last semester of their teacher education program, with the goal of ensuring that we have a sample of active participants moving into teaching. We will stay in close contact with our volunteers between program completion and employment to improve the response rate of study volunteers.

Once program completers have been hired we will work with study volunteers and employer districts to support district and school level study approvals.

At the close of academic year 2019-20, we will analyze the results of these studies with two main goals: first, to deepen our understanding of the connection between program design and implementation and P-12 student outcomes; and second, to identify ways in which these studies may be adjusted moving forward to collect data that support program improvement.

Current Indicators of Teaching Effectiveness

We graduate candidates who are classroom-ready. The College measures candidates’ teaching effectiveness through the ST-1 and the state-mandated Teacher Performance Assessments. The State of California has begun to survey program completers who are classroom teachers, asking for evidence of their understanding of the link between their teaching effectiveness and their teacher education program. Each program receives these data annually and has an opportunity to review and adjust based on the results. As we have received only two cycles of these data (2017 and 2018), we are awaiting our 2019 data before beginning trend analysis.

Access to the data the Attallah College uses, and the security and reliability of those data, start with the Program Assessment and Improvement Unit (PAI). The PAI ensures the security of sensitive student data and, drawing on its training in university systems, conducts queries that give faculty and staff access to data for program decision making that informs continuous improvement. The PAI includes a director and a coordinator, both of whom engage in continuous learning to support improvement in data collection and dissemination and to remain current on data management, the software tools used by the University, and the various systems that house data and are used for reporting. The Chapman Institutional Research Office is the primary source of student information for the University; it draws on the University’s data, with additional data collected from Taskstream and PeopleSoft, as well as program-level data.

References

Baker, E. L., Barton, P. E., Darling-Hammond, L., Haertel, E., Ladd, H. F., Linn, R. L., Ravitch, D., Rothstein, R., Shavelson, R. J., & Shepard, L. A. (2010). Problems with the use of student test scores to evaluate teachers (Briefing Paper No. 278). Washington, DC: Economic Policy Institute.

Bill & Melinda Gates Foundation. (2010). Learning about teaching: Initial findings from the Measures of Effective Teaching Project. Seattle, WA: Author.

Chaplin, D., Gill, B., Thompkins, A., & Miller, H. (2014). Professional Practice, Student Surveys, and Value-Added: Multiple Measures of Teacher Effectiveness in the Pittsburgh Public Schools. REL 2014-024. Regional Educational Laboratory Mid-Atlantic.

Corcoran, S. P. (2010). Can teachers be evaluated by their students’ test scores? Should they be? The use of value-added measures of teacher effectiveness in policy and practice. Providence, RI: Annenberg Institute for School Reform at Brown University.

Hill, H. C., Kapitula, L., & Umland, K. (2011). A validity argument approach to evaluating teacher value-added scores. American Educational Research Journal, 48(3), 794-831.

Mangiante, E. M. S. (2011). Teachers matter: Measures of teacher effectiveness in low-income minority schools. Educational Assessment, Evaluation and Accountability, 23(1), 41-63.

5.5 The provider assures that appropriate stakeholders, including alumni, employers, practitioners, school and community partners, and others defined by the provider, are involved in program evaluation, improvement, and identification of models of excellence.

The Attallah College posts measures of completer impact on its website, shares them at program meetings, and shares ongoing data trends, including enrollments and graduation rates, at monthly program meetings. The Associate Dean for Graduate Studies and the Director of Teacher Education examine and monitor impact and trends in enrollment and certification. These data are shared with the College leadership team at the Dean's meetings. Admissions data are tracked by the Office of Program Improvement and Assessment and shared bi-annually as part of the program data packages, which serve as the data for each program's analysis and continuous improvement.

[1] Candidates will be selected to ensure that the sample is representative of program completers, including education specialists (mild/moderate and moderate/severe), multiple subject, and single subject completers, as well as MAT and MACI program completers.

[1] All instruments denoted with an * have been administered for only two cycles as of this submission. We expect to administer them for two additional cycles before our onsite visit, thus allowing for a full 3 cycles of data availability.

[2] This survey has been updated (as noted in Table 5.1) and will be administered in spring 2019 (SP 19).